Warehouse-native Analytics – now with the power of Optimizely

NetSpring joins Optimizely, the world’s largest experimentation platform, making it the first DXP to offer warehouse-native analytics.

Secure, governed, trustworthy, and cost-effective analytics on your single source of truth

-

Single source of truth

No data copies outside your secure enterprise environment.

Comply with corporate security, privacy, and governance policies.

-

Trust in Numbers

Single analytics tool with the ability to verify the SQL behind every analytical computation.

Build trust in your analytics and make data-driven decisions with confidence.

-

Cost-effective Query Processing

Push highly cost-effective and performance-optimized queries down to the data warehouse.

Lower costs with no ETL or reverse ETL, and leveraging elastic compute to pay only for insights, not event volumes.

Understand product usage patterns to optimize user engagement and retention

-

Behavioral Analytics Templates

Rich library of reporting templates for event segmentation, retention, funnel, path, cohort etc.

Quantify and visualize user behavior without SQL or dependence on data teams.

-

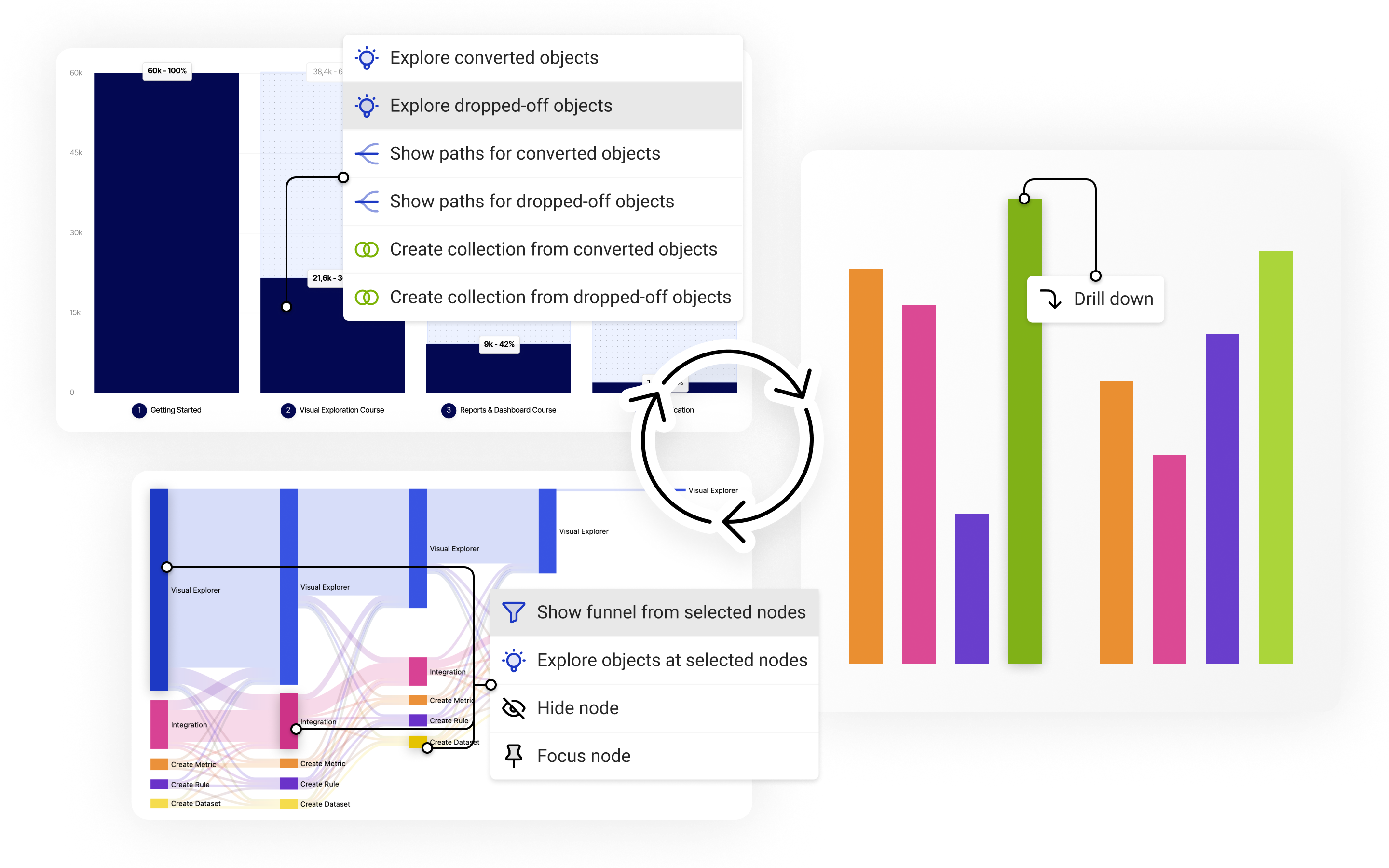

Deep-dive Exploration

Fork off from templated reports to answer the next question.

Identify drivers of user behavioral patterns through self-service visual exploration.

-

Business-Impactful Analytics

Analyze across product events and all customer business data in the warehouse.

Quantify impact of product feature usage on revenue and support.

Analyze the entire customer journey from acquisition to retention – to optimize growth metrics

-

Customer 360

Visualize entire customer journeys end-to-end – in-product and outside.

Single, consistent view of your customers for all product- and customer-oriented teams.

-

Multi-stream Analysis

Analyze across across all customer touch points and channels.

Optimize marketing campaigns to acquire your most profitable customers.

-

Customer Experience Monitoring

Continuously monitor key KPIs.

Alert on experience degradations and revenue opportunities.

Self-service ad hoc exploratory analytics for business teams

-

Reports and Dashboards

Visualizations and dashboards with templates, advanced configuration, and parameterization.

Self-service authoring of reports and dashboards by business teams, with secure sharing and collaboration.

-

Ad Hoc Exploration

Free-form reporting across all data in the warehouse, using a rich library of UI-driven templates.

Ease of use of a visual exploration interface with the optional power of SQL, to answer analytical questions of any complexity.

-

Analytic Applications

Semantic modeling with light-weight annotations and UI-driven templates.

Build and publish use case and domain specific applications that accurately reflect the complexity of your business.

Warehouse-Native

Secure, governed, trustworthy, and cost-effective analytics on your single source of truth

-

Single source of truth

No data copies outside your secure enterprise environment.

Comply with corporate security, privacy, and governance policies.

-

Trust in Numbers

Single analytics tool with the ability to verify the SQL behind every analytical computation.

Build trust in your analytics and make data-driven decisions with confidence.

-

Cost-effective Query Processing

Push highly cost-effective and performance-optimized queries down to the data warehouse.

Lower costs with no ETL or reverse ETL, and leverage elastic compute to pay only for insights, not event volumes.

Product Analytics

Understand product usage patterns to optimize user engagement and retention

-

Behavioral Analytics Templates

Rich library of reporting templates for event segmentation, retention, funnel, path, cohort, and more.

Quantify and visualize user behavior without SQL or dependence on data teams.

-

Deep-dive Exploration

Fork off from templated reports to answer the next question.

Identify drivers of user behavioral patterns through self-service visual exploration.

-

Business-Impactful Analytics

Analyze across product events and all customer business data in the warehouse.

Quantify impact of product feature usage on revenue and support.

Customer Journey Analytics

Analyze the entire customer journey from acquisition to retention – to optimize growth metrics

-

Customer 360

Visualize entire customer journeys end-to-end – in-product and outside.

Single, consistent view of your customers for all product- and customer-oriented teams.

-

Multi-stream Analysis

Analyze across all customer touch points and channels.

Optimize marketing campaigns to acquire your most profitable customers.

-

Customer Experience Monitoring

Continuously monitor key KPIs.

Alert on experience degradations and revenue opportunities.

Business Intelligence

Self-service ad hoc exploratory analytics for business teams

-

Reports and Dashboards

Visualizations and dashboards with templates, advanced configuration, and parameterization.

Self-service authoring of reports and dashboards by business teams, with secure sharing and collaboration.

-

Ad Hoc Exploration

Free-form reporting across all data in the warehouse, using a rich library of UI-driven templates.

Ease of use of a visual exploration interface with the optional power of SQL, to answer analytical questions of any complexity.

-

Analytic Applications

Semantic modeling with lightweight annotations and UI-driven templates.

Build and publish use-case- and domain-specific applications that accurately reflect the complexity of your business.

Why customers switch to NetSpring

The best of templated product analytics and ad hoc exploratory BI. Work natively on all your product and customer data in the warehouse, with no siloed copies. Cost-effective, trustworthy, and business-impactful analytics.

BENEFITS

Product & Growth Teams

Acquire the best customers. Provide delightful product experiences. Profit.

Self-Service, Every Answer

Identify features and behaviors that drive growth or churn. Self-serve, with no SQL or dependence on data teams.

Trust in Numbers

Build trust with consistent and fully auditable insights, in a single analytics tool working off the single source of data truth.

Revenue-Impactful Analytics

Tie campaigns and product engagement to revenue. Acquire high-value customers. Build profitable products.

BENEFITS

Data Teams

Get the best ROI for your analytics.

Low Cost

Store all your event data in inexpensive cloud object stores. Leverage elastic compute of cloud warehouses to pay only for insights, not event volumes.

Ease of Data Management

Single source of truth for all data in the warehouse. Low TCO with no ETL or reverse ETL to/from proprietary stores. Avoid time spent debugging inconsistencies in numbers across silos.

Governed Self-Service

Provide business teams governed access to data with a self-service tool. Free up technical resources by avoiding repeated one-off requests from business.

Unlock more business value from your product and customer data

Learn why companies are upgrading their analytics platforms.

Testimonials

-

NetSpring is the Holy Grail of product analytics. You don’t have to move your data anywhere.

It sits directly on your data warehouse, looks across all data sets, and supports both traditional BI analysis and modern event-centric product analytics. It is also self-service, so you can expand the reach and impact to everyone in the organization, not just technical teams.

And when it comes to cost, NetSpring is cost-efficient and scales with our business.

Chang Yu, VP of Product

-

Relative to our peer Web3 companies, NetSpring gives us an important competitive advantage. With NetSpring working directly on our data warehouse, we now have a view into retention and activation others don’t have.

We can track cohort-specific KPIs, then easily build and test hypotheses that are leading to improvements to our platform, especially around the first user experience. The ability to segment our creators by specific behaviors has helped us identify which segments matter most. This was a level of granularity previously hidden from us.

Matt Alston, Co-Founder & CEO

-

In subscription businesses, you have to closely follow the customer lifecycle. You may be looking at feature adoption or churn, at how to create more value, or how to make customers aware of new products. And internally, for sales, marketing, or customer support departments – providing insights.

Basically with this cloud architecture we have an ability to look at product telemetry data as well as business transaction data. The magic is when we intersect these and do cohort analysis. We can slice and dice from many different perspectives, and that’s where the insights come.

Awinash Sinha, CIO

-

You would think that if I handed you a product analytics platform, I would be excited PMs are looking at retention rate. But only half of the cancellations happened inside the product. The other half happened because somebody picked up a phone to cancel.

No events were ever created, and as such, our retention curves were materially misstated. That immediately starts to undermine the credibility of any first-generation tool.

John Humphrey, Former Head of Data Platform Product

-

How can we make the experience of buying groceries on Instacart not just more convenient, but also more efficient and delightful than shopping in the store?

To inspire product strategy, we spend a lot of time trying to understand patterns of shopping so we can build personalized experiences.

Anahita Tafvizi, VP and Head of Data Science & Business Operations

-

Our product managers and growth managers rely heavily on data to see how customers are using the platform. How frequently are they using it? What capabilities are they using? Which capabilities are resonating more or less with them?

That informs our product roadmap.

Saket Srivastava, CIO

-

Cloud data warehouses like Snowflake, Redshift, BigQuery, Databricks, and Azure have become the de facto place where businesses pull data out and use it for a business purpose.

So the more compute you push on the cloud data warehouse, the closer it stays to the ecosystem, and the easier it is for anyone to even consume such a system.

Sanjay Agrawal, Co-Founder & CEO

-

Amplitude and Mixpanel are basically a time series database underneath, with a UI. Time series data tends to be write once.

You need to take advantage of those techniques data warehouses are born with. It makes sense to put this into a data warehouse, rather than a custom database like Datadog, Mixpanel, or Amplitude. Plus you have additional benefits from it because you can cross reference that data with the rest of the business data.

Nikita Shamgunov, Founder & CEO

-

We want to look at product funnels and customer journeys, but then combine that with Salesforce data. But it was surprisingly hard to do with a lot of these cloud product analytics tools. They’re only designed to ingest a specific kind of data. And if you want to combine other data sources, it becomes really fragile and complicated to set up those data pipelines.

Warehouse-native enables that and unlocks that set of use cases. Why do you need to ship everything to another vendor to do specific parts of your analytics? It just does not make good sense.

Soumyadeb Mitra, Founder & CEO

-

True centralization aggregates data from all channels, not just what someone clicked on the website: offline, IoT, support, etc.

Let’s have shipping and returns data, and everything else that is required to properly instrument a real business – put in a single place, which is governed, secure, complete, and accurate.

Jason Davis, Founder & CEO

-

A core focus of DCP Midstream is our commitment to operational excellence.

Leveraging NetSpring to analyze real-time data gives our team members key information to prioritize critical work, support quick response, and more effectively serve our customers.

Rob Sadler, Group Vice President of Energy, Transition & Transformation

Getting started with NetSpring is easy.